Introduction and Problem

What is HBase? What are RegionServers? What do they look like as services in a real Hadoop and big data environment? What issues and use cases come up with HBase? Let's take a look at HBase, RegionServers, and one issue related to them.

What is HBase?

When we need real-time, random read/write access to data that already lives in Hadoop, we need Apache HBase.

That data may have been brought into Hadoop earlier with an existing tool such as Sqoop before it becomes a use case for HBase.

Moreover, HBase (the Hadoop database) can host very large tables, on the order of billions of rows by millions of columns, which gives it an edge over relational databases beyond real-time data retrieval.

What are RegionServers?

RegionServers are the daemons that store and retrieve data in HBase. Simple.

In Hadoop production, QA, and test environments, each RegionServer is deployed on its own dedicated compute node.

Once we start using HBase, we create a table much as we would in SQL or Hive (the syntax differs) and then begin storing and retrieving our data.

Once an HBase table grows beyond a threshold, HBase automatically starts splitting the table and distributing the data load to other RegionServers.

This process is called auto-sharding: HBase scales automatically as we add more data to the system. That is a big advantage over most DBMSs, which require manual intervention to scale the overall system from one server to the next.

Scaling is also automatic as long as another configured spare server is available in the rack.

Additionally, we need not cap table size and split tables by hand: because HDFS is the underlying storage mechanism, all available disks in the HDFS cluster can be used to store our tables (not counting the replication factor).
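To make the idea of auto-sharding concrete, here is a minimal toy sketch in Python. It is not HBase's actual split policy: the `ToyTable` class, the row-count threshold `SPLIT_THRESHOLD`, the server names, and the round-robin placement are all assumptions made for illustration. It only shows the general pattern of splitting a region once it grows past a limit and spreading the resulting regions over several servers.

```python
import bisect
from typing import Dict, List

SPLIT_THRESHOLD = 4  # hypothetical limit: rows per region before a split


class ToyTable:
    """Toy model of a table that splits its regions as it grows."""

    def __init__(self, servers: List[str]) -> None:
        self.servers = servers
        # Each region is a sorted list of row keys; regions stay in key order.
        self.regions: List[List[str]] = [[]]

    def put(self, row_key: str) -> None:
        # Pick the last region whose first key is <= row_key (default: first).
        idx = 0
        for i, region in enumerate(self.regions):
            if region and region[0] <= row_key:
                idx = i
        bisect.insort(self.regions[idx], row_key)
        if len(self.regions[idx]) > SPLIT_THRESHOLD:
            self._split(idx)

    def _split(self, idx: int) -> None:
        # Cut the oversized region at its midpoint into two adjacent regions.
        rows = self.regions[idx]
        mid = len(rows) // 2
        self.regions[idx:idx + 1] = [rows[:mid], rows[mid:]]

    def assignment(self) -> Dict[str, List[List[str]]]:
        # Spread regions over the servers round-robin (a simplification).
        placed: Dict[str, List[List[str]]] = {s: [] for s in self.servers}
        for i, region in enumerate(self.regions):
            placed[self.servers[i % len(self.servers)]].append(region)
        return placed


table = ToyTable(servers=["rs1", "rs2"])
for n in range(10):
    table.put(f"row{n:02d}")
for server, regions in table.assignment().items():
    print(server, regions)
```

The caller never asks for a split; it only calls `put`, and the table reorganizes itself, which is the property the paragraph above describes.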

We should not limit ourselves to just one RegionServer to manage our tables when we have an entire cluster at our disposal.

Below are a few screenshots showing how the HBase and Region Server services look in a monitoring tool (Cloudera Manager is shown here).

HBase Service in Cloudera Manager:

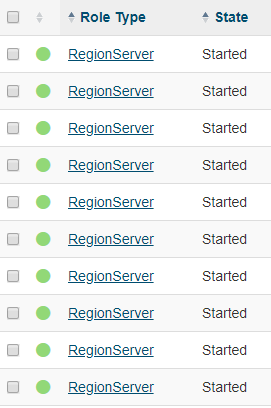

Region Server Services in Cloudera Manager:

Now that we know what HBase and RegionServers are and what they look like as services in a real Hadoop cluster, let's talk about the use case: an issue in which many Region Servers were down in our Hadoop cluster. We tried restarting a single Region Server, which failed, and then tried restarting the HBase service, which also failed. What can we do in this tough situation? How do we bring the HBase service and the Region Servers back to a normal state? We discuss this scenario in detail below.

Issue: Region servers down and not coming up even after restart.

Thought Process and Implementation Steps:

This kind of issue can arise for many reasons, and the root cause can differ from case to case. Today we will look at one specific root cause that I have faced quite a few times and that may help you some day as well.

There is a location in HDFS where HBase keeps its write-ahead log (WAL) files, and often either that location is fully utilized or some of its contents are corrupted. That location is:

“/hbase/WALs” or “/hbase/oldWALs”

We need to check the HBase logs under “/var/log/hbase/”. In this specific scenario the logs should contain an error such as:

“Failed to archive/delete all the files for /hbase/WALs/region:ph_sears_prcm_curr_future” OR “Failed to remove file for /hbase/WALs/region:ph_sears_prcm_curr_future”
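Checking the logs for these messages can be sketched as a small Python filter. This is only an illustration under stated assumptions: the regular expression is built from the two messages quoted above, the function name `find_wal_errors` is hypothetical, and the sample lines (including the `INFO`/`ERROR` prefixes) are made up rather than taken from a real RegionServer log.

```python
import re
from typing import Iterable, List

# Pattern assumed from the two error messages quoted in the text above.
WAL_ERROR = re.compile(
    r"Failed to (?:archive/delete all the files|remove file) for (/hbase/WALs/\S+)"
)


def find_wal_errors(lines: Iterable[str]) -> List[str]:
    """Return the distinct WAL paths named in matching error lines."""
    paths: List[str] = []
    for line in lines:
        match = WAL_ERROR.search(line)
        if match and match.group(1) not in paths:
            paths.append(match.group(1))
    return paths


# Hypothetical sample lines; real log lines carry timestamps and class names.
sample = [
    "INFO  RegionServer starting",
    "ERROR Failed to archive/delete all the files for "
    "/hbase/WALs/region:ph_sears_prcm_curr_future",
    "ERROR Failed to remove file for /hbase/WALs/region:ph_sears_prcm_curr_future",
]
print(find_wal_errors(sample))
```

In practice the lines would come from a file opened under the HBase log directory instead of the `sample` list.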

If the error looks like the above, follow the steps below to resolve the issue:

- Stop all roles on the affected node.

- Move the content of the HDFS location “/hbase/WALs/” that belongs to the failed node to a backup location, e.g. /user/hdfs. For example, if the issue is on host “trphsw4-11.hadoop.searshc.com”, move “/hbase/WALs/trphsw4-11.hadoop.searshc.com,60020,1475072849247” to the backup location “/user/hdfs”.

- Restart the Region Server on the affected node.

- The Region Server role should now be up and running.

- Start the other roles on the affected node.

- Monitor the logs for further investigation.
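The move step in the list above can be sketched as follows. This is a dry-run sketch, not a definitive procedure: the helper name `wal_backup_commands` is hypothetical, and it only builds and prints `hdfs dfs` commands (`-ls` to inspect the directory, `-mv` to move it) so they can be reviewed before anyone runs them by hand as a user with the right HDFS permissions.

```python
import posixpath
import shlex
from typing import List

WAL_ROOT = "/hbase/WALs"  # WAL location named in the text above


def wal_backup_commands(wal_dir_name: str,
                        backup_dir: str = "/user/hdfs") -> List[List[str]]:
    """Build (but do not run) the commands that move one node's WAL directory."""
    src = posixpath.join(WAL_ROOT, wal_dir_name)
    return [
        ["hdfs", "dfs", "-ls", src],              # inspect what will be moved
        ["hdfs", "dfs", "-mv", src, backup_dir],  # move it to the backup location
    ]


# Directory name taken from the example host in the steps above.
commands = wal_backup_commands("trphsw4-11.hadoop.searshc.com,60020,1475072849247")
for argv in commands:
    print(shlex.join(argv))
```

Printing the commands instead of executing them keeps the sketch safe to run anywhere; the Region Server restart itself still happens through the cluster manager.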

Backup Implementation Plan:

If the Region Server still does not start after performing the above steps, perform the steps below:

- Move the file from the backup directory “/user/hdfs” back to the “/hbase/WALs” directory.

- The file should be the same one that was backed up under the implementation plan.

- Monitor the logs for further investigation.
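The rollback can be sketched the same way. Again this is a dry-run illustration under assumptions: `wal_restore_command` is a hypothetical helper, the default paths are the ones named in the text, and the command is only printed so it can be checked before being run manually.

```python
import posixpath
from typing import List


def wal_restore_command(wal_dir_name: str,
                        backup_dir: str = "/user/hdfs",
                        wal_root: str = "/hbase/WALs") -> List[str]:
    """Build (but do not run) the command that moves a backed-up WAL directory back."""
    src = posixpath.join(backup_dir, wal_dir_name)  # where the backup was placed
    dst = posixpath.join(wal_root, wal_dir_name)    # its original location
    return ["hdfs", "dfs", "-mv", src, dst]


# Same directory name as in the implementation steps.
restore = wal_restore_command("trphsw4-11.hadoop.searshc.com,60020,1475072849247")
print(" ".join(restore))
```

Spelling out the full destination path, rather than only the parent directory, makes it explicit that the directory returns under its original name.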

This blog was written by a team of senior big data and Hadoop developers from NEX Softsys. You can hire our developers for advanced business development solutions that uncover new possibilities in your data. We provide full-fledged custom big data development and consulting services.