In this article, Java J2EE development experts share the concept of Spring Cloud Data Flow. Read this post to learn about its features, its function and its major components.

Technology: Spring Cloud Data Flow is a cloud-native orchestration service for composable microservice applications on modern runtimes. With Spring Cloud Data Flow, developers can create and orchestrate data pipelines for common use cases such as data ingest, real-time analytics and data import/export. It is a deployment and hosting tool for Java-based microservices, and it also serves as a dashboard for monitoring their behavior and health. It can run multiple instances of the same application, and it records the full details of the executions a user triggers from either the UI or the shell.

Spring Cloud Data Flow aims to simplify large applications by splitting them into multiple, easily integrated microservices. It is the cloud-native redesign of the Spring XD framework. These applications can run independently, and they can be easily integrated using Spring Cloud Data Flow.

Spring Cloud Data Flow can be deployed locally and on various cloud platforms such as Cloud Foundry, Apache YARN, Apache Mesos and Kubernetes.

Spring Cloud Data Flow comes with a collection of best practices and a set of ready-made, microservice-based distributed streaming, task and batch applications.

Features of Spring Cloud Data Flow:

- We can design streams using a drag-and-drop GUI.

- We can create, troubleshoot and manage microservices in isolation.

- We can build data pipelines using out-of-the-box stream and task/batch applications.

- Spring Cloud Data Flow consumes each microservice as a Maven artifact or a Docker image.

- We can monitor the metrics and health of each microservice application.

Introduction to Spring Cloud Data Flow:

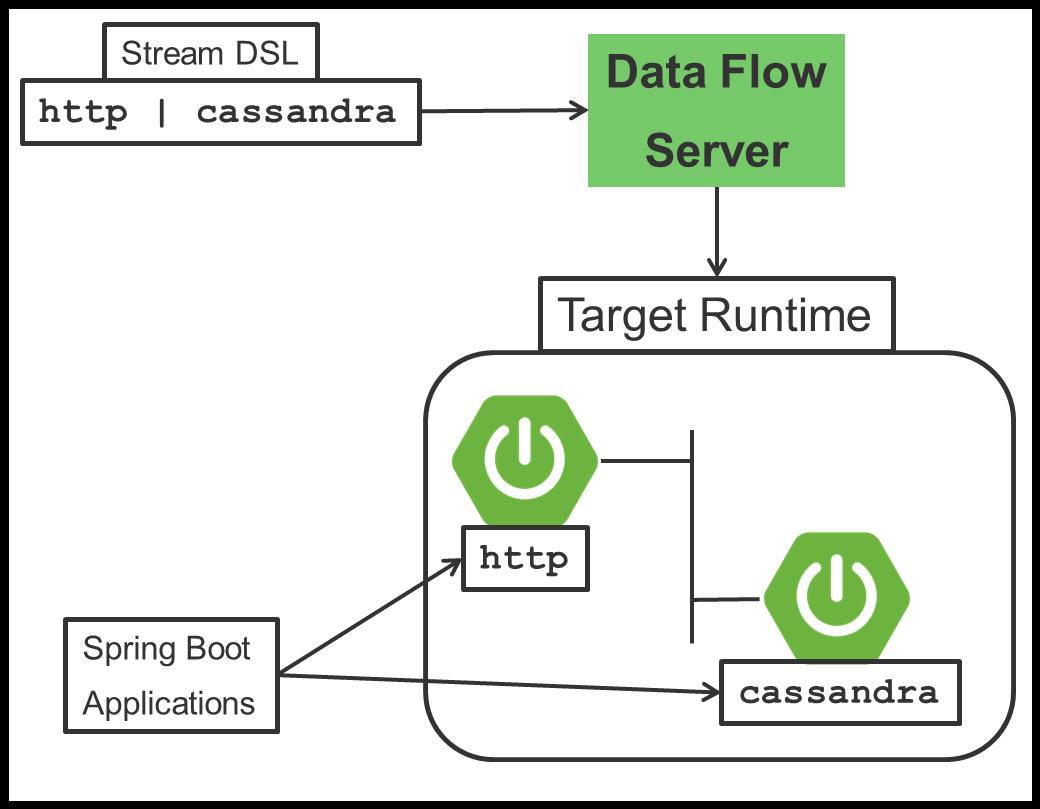

Spring Cloud Data Flow simplifies the development and deployment of data-oriented applications. Its major components are the Data Flow server, the applications and the target runtime.

1) Applications:

Applications are of two types.

A. Short lived applications:

This type of application runs for a short amount of time and then terminates. Example: Spring Cloud Task. Whenever we execute a task, it is launched and then terminates once the task completes; the same applies to batch applications.
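To make the short-lived lifecycle concrete, here is a minimal plain-Java sketch. It does not use the Spring Cloud Task API; the class `ShortLivedTaskSketch` and its `Execution` holder are hypothetical names invented for illustration. It only shows the pattern: run the work once, record how it went, and let the process end.

```java
// A plain-Java sketch of a short-lived task: run some work, record the outcome, exit.
class ShortLivedTaskSketch {

    // Hypothetical record of one run: when it started, when it ended, how it exited.
    static final class Execution {
        final long startMillis;
        final long endMillis;
        final int exitCode;

        Execution(long startMillis, long endMillis, int exitCode) {
            this.startMillis = startMillis;
            this.endMillis = endMillis;
            this.exitCode = exitCode;
        }
    }

    // Runs the work once; exit code 0 on success, 1 if the work throws.
    static Execution run(Runnable work) {
        long start = System.currentTimeMillis();
        int exitCode = 0;
        try {
            work.run();
        } catch (RuntimeException e) {
            exitCode = 1;
        }
        return new Execution(start, System.currentTimeMillis(), exitCode);
    }

    public static void main(String[] args) {
        Execution execution = run(() -> System.out.println("importing one batch of records"));
        System.out.println("exit code: " + execution.exitCode);
        // main returns here and the JVM exits, which is what makes the application short lived.
    }
}
```

A long-lived application, by contrast, would never reach the end of `main` on its own.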

B. Long lived applications:

This type of application runs for an unbounded amount of time. These are mostly message-based applications.

Example:

Spring Cloud Stream. This type of application consumes messages from, and produces messages to, the message broker configured for the Data Flow server.
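A stream application typically plays one of three roles: a source produces messages, a processor consumes and produces them, and a sink only consumes. The sketch below models those roles with plain `java.util.function` types. It is an illustration only: there is no broker here, a direct method call stands in for each message hop, and the class name and payload are made up.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.function.Consumer;
import java.util.function.Function;
import java.util.function.Supplier;

// Plain-Java stand-ins for the three roles a stream application can play.
class StreamRolesSketch {

    // Source: produces messages (hypothetical payload).
    static Supplier<String> source() {
        return () -> "hello";
    }

    // Processor: consumes a message and produces a transformed one.
    static Function<String, String> processor() {
        return payload -> payload.toUpperCase(Locale.ROOT);
    }

    // Sink: consumes messages; here it just stores them in a list.
    static Consumer<String> sink(List<String> store) {
        return store::add;
    }

    // Passes one message from the source through the processor into the sink.
    static List<String> runOnce() {
        List<String> store = new ArrayList<>();
        sink(store).accept(processor().apply(source().get()));
        return store;
    }

    public static void main(String[] args) {
        System.out.println(runOnce()); // [HELLO]
    }
}
```

In a real deployment each role would be a separate long-running process, and the broker would carry the messages between them.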

2) Application packaging:

Applications can be packaged in two ways.

A. Spring boot jar file:

We can specify a Spring Boot jar file, an HTTP URL pointing to a Spring Boot jar file, or a Maven artifact.

B. Docker Image:

We can also specify the Docker image URL for microservice applications.
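The two packaging options can be told apart by the form of the location we supply. The sketch below assumes the location is given as a URI with a `maven://`, `http://`, `https://`, `file:` or `docker:` prefix; these prefixes and the example coordinates are assumptions for illustration, so check the Data Flow reference guide for the exact formats your version accepts.

```java
// Classifies an application location by its prefix (prefixes are assumed, see above).
class PackagingSketch {

    enum Packaging { SPRING_BOOT_JAR, DOCKER_IMAGE, UNKNOWN }

    static Packaging classify(String uri) {
        if (uri.startsWith("docker:")) {
            return Packaging.DOCKER_IMAGE;
        }
        // A jar can come from a Maven repository, a web server or the local disk.
        if (uri.startsWith("maven://") || uri.startsWith("http://")
                || uri.startsWith("https://") || uri.startsWith("file:")) {
            return Packaging.SPRING_BOOT_JAR;
        }
        return Packaging.UNKNOWN;
    }

    public static void main(String[] args) {
        // Hypothetical coordinates, not real artifacts.
        System.out.println(classify("maven://com.example:my-task:1.0.0"));
        System.out.println(classify("docker:example/my-task:1.0.0"));
    }
}
```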

3) Runtime:

This is the target system runtime where our applications are executed. The supported runtimes are:

- Cloud Foundry

- Apache YARN

- Kubernetes

- Apache Mesos

- Local server, for development

We can also deploy to other runtime environments by extending the Data Flow server using the deployer Service Provider Interface (SPI).
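To give a feel for what such an extension point looks like, here is a deliberately simplified sketch. `SimpleDeployer` is a hypothetical interface written for this post; it is not the actual SPI, which has richer request and status types. The in-memory implementation pretends to be a runtime by recording what was "deployed".

```java
import java.util.HashMap;
import java.util.Map;

class DeployerSketch {

    enum Status { DEPLOYED, UNKNOWN }

    // Hypothetical, simplified contract that a new runtime would implement.
    interface SimpleDeployer {
        String deploy(String appName, String resourceUri);
        void undeploy(String deploymentId);
        Status status(String deploymentId);
    }

    // In-memory "runtime": deploying just records the resource under a generated id.
    static class InMemoryDeployer implements SimpleDeployer {
        private final Map<String, String> deployed = new HashMap<>();
        private int counter = 0;

        @Override
        public String deploy(String appName, String resourceUri) {
            String id = appName + "-" + (++counter);
            deployed.put(id, resourceUri);
            return id;
        }

        @Override
        public void undeploy(String deploymentId) {
            deployed.remove(deploymentId);
        }

        @Override
        public Status status(String deploymentId) {
            return deployed.containsKey(deploymentId) ? Status.DEPLOYED : Status.UNKNOWN;
        }
    }

    public static void main(String[] args) {
        SimpleDeployer deployer = new InMemoryDeployer();
        // Hypothetical Maven coordinates.
        String id = deployer.deploy("http", "maven://com.example:http-source:1.0.0");
        System.out.println(id + " -> " + deployer.status(id));
        deployer.undeploy(id);
        System.out.println(id + " -> " + deployer.status(id));
    }
}
```

The point of the design is that the server only talks to the interface, so supporting a new platform means supplying one more implementation.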

The Data Flow server interprets:

- The stream DSL, which describes the logical flow of data through multiple applications.

- The deployment manifest, which specifies the number of instances of each application, its memory allocation and its data partitioning.
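Instance count and data partitioning go together: once an application runs as several instances, each message has to be routed to exactly one of them. The sketch below shows one common scheme, hashing a message key modulo the instance count. This is an assumption chosen for illustration, not necessarily the strategy Data Flow or its message binders use, and the keys are made up.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class PartitionSketch {

    // Picks an instance index in [0, instances) for a key; the same key always maps to the same index.
    static int partitionFor(String key, int instances) {
        if (instances <= 0) {
            throw new IllegalArgumentException("instances must be positive");
        }
        // floorMod keeps the result non-negative even when hashCode() is negative.
        return Math.floorMod(key.hashCode(), instances);
    }

    // Groups keys by the instance index that would receive them.
    static Map<Integer, List<String>> assign(List<String> keys, int instances) {
        Map<Integer, List<String>> byInstance = new TreeMap<>();
        for (String key : keys) {
            byInstance.computeIfAbsent(partitionFor(key, instances), i -> new ArrayList<>()).add(key);
        }
        return byInstance;
    }

    public static void main(String[] args) {
        // Three instances, four messages keyed "a", "b", "c", "a".
        System.out.println(assign(List.of("a", "b", "c", "a"), 3)); // {0=[c], 1=[a, a], 2=[b]}
    }
}
```

Both messages with key `a` land on the same instance, which is what lets an instance keep per-key state.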

DSL Stream:

Example DSL: http | cassandra

Here http and cassandra are the names under which the applications are registered in the Data Flow server. The http application is a source, and the cassandra application consumes the messages that the http application produces. The pipe symbol represents the communication between the applications, which goes through a middleware message broker such as Apache Kafka or RabbitMQ. The message broker middleware is responsible for creating the topics/queues dynamically.
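The way a pipe-separated definition maps to application roles can be sketched in a few lines of Java. This is a toy parser written for this post, not the Data Flow DSL parser: it only handles bare application names separated by `|`, ignores options, and assumes the first name is the source and the last is the sink.

```java
import java.util.ArrayList;
import java.util.List;

class StreamDslSketch {

    // Splits "a | b | c" into trimmed application names.
    static List<String> apps(String dsl) {
        List<String> names = new ArrayList<>();
        // The -1 limit keeps trailing empty parts so "http |" is rejected below.
        for (String part : dsl.split("\\|", -1)) {
            String name = part.trim();
            if (name.isEmpty()) {
                throw new IllegalArgumentException("empty application name in: " + dsl);
            }
            names.add(name);
        }
        return names;
    }

    // First application is the source, last is the sink, anything in between is a processor.
    static String role(int index, int total) {
        if (index == 0) {
            return "source";
        }
        if (index == total - 1) {
            return "sink";
        }
        return "processor";
    }

    public static void main(String[] args) {
        List<String> names = apps("http | cassandra");
        for (int i = 0; i < names.size(); i++) {
            System.out.println(names.get(i) + " -> " + role(i, names.size()));
        }
    }
}
```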

Running the Data Flow server locally:

The Data Flow server depends on a Redis server. You can download Redis from http://download.redis.io/releases/redis-3.2.5.tar.gz

Extract the archive, then start the Redis server using the command below: `redis-server.exe redis.windows.conf`

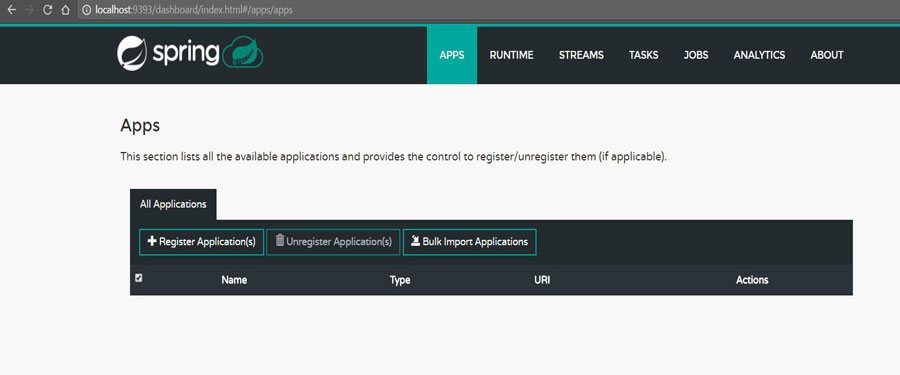

You can download the Spring Cloud Data Flow local server jar file from the Maven repository: https://repo.spring.io/release/org/springframework/cloud/spring-cloud-dataflow-server-local/1.1.0.RELEASE/spring-cloud-dataflow-server-local-1.1.0.RELEASE.jar

You can start the server locally using `java -jar spring-cloud-dataflow-server-local-1.1.0.RELEASE.jar`. By default the Data Flow server runs on port 9393. After a successful start, open http://localhost:9393/dashboard in your favorite browser and you will see a screen similar to the one below.

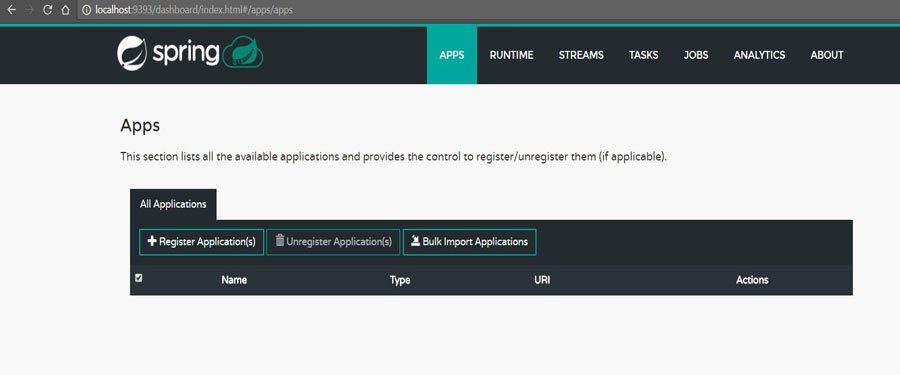

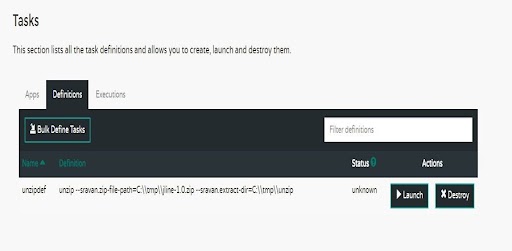
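If the dashboard does not load, a quick programmatic check can tell us whether anything is listening on the port at all. The sketch below uses the JDK's built-in `java.net.http.HttpClient` (Java 11 or later); the class name and the timeout values are arbitrary choices for illustration, and it treats any HTTP response as "reachable" without inspecting the status code.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

class DashboardProbeSketch {

    // Builds the dashboard address from a host and port.
    static URI dashboardUri(String host, int port) {
        return URI.create("http://" + host + ":" + port + "/dashboard");
    }

    // Returns true if anything answers HTTP at the URI within the timeouts.
    static boolean isReachable(URI uri) {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(2))
                .build();
        HttpRequest request = HttpRequest.newBuilder(uri)
                .timeout(Duration.ofSeconds(5))
                .GET()
                .build();
        try {
            client.send(request, HttpResponse.BodyHandlers.discarding());
            return true;
        } catch (IOException e) {
            // Connection refused, timeout and similar failures end up here.
            return false;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        URI uri = dashboardUri("localhost", 9393);
        System.out.println(uri + " reachable: " + isReachable(uri));
    }
}
```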

We can write custom microservices of type source, processor, sink or task and register them with the Data Flow server. We will cover how to develop these applications in later blog posts. When we register a task application, we get an alert as shown in the image.

Go to the TASKS menu and open the Apps tab; there you will find the registered app.

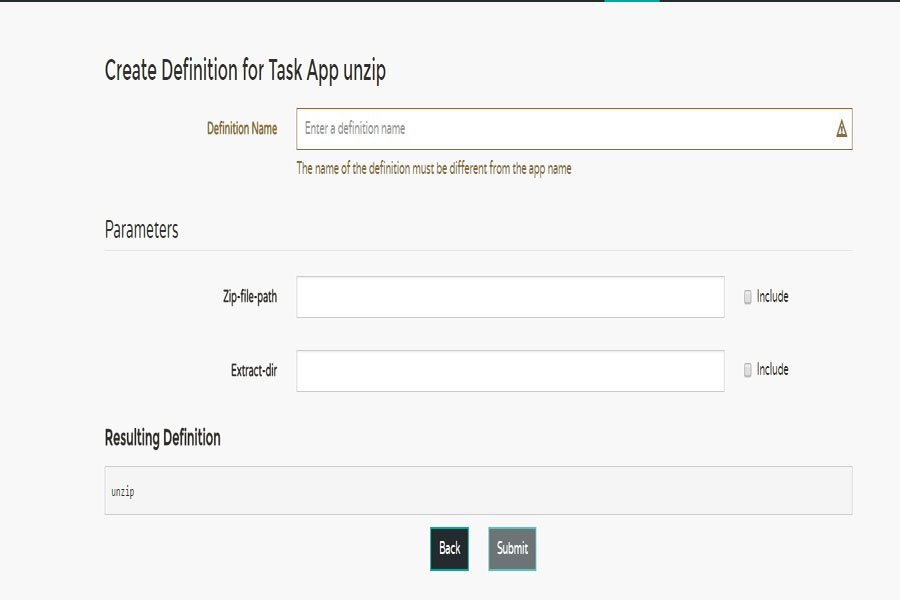

Then click Create Definition on the registered app, and you will see the screen below.

Definition name: any text that identifies the task.

The Parameters section lists the parameters required to run the task. If a parameter is mandatory, tick its Include checkbox and submit.

We can then launch the task using the Launch button.

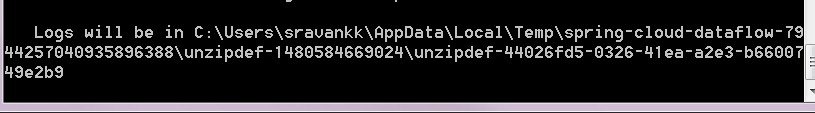

For debugging purposes, the log folder path is printed in the server console.

Spring Data Flow Shell:

We can also connect to the Data Flow server using the Spring Cloud Data Flow shell, which is built on Spring Shell. We can download the shell from the Spring repository using the URL:

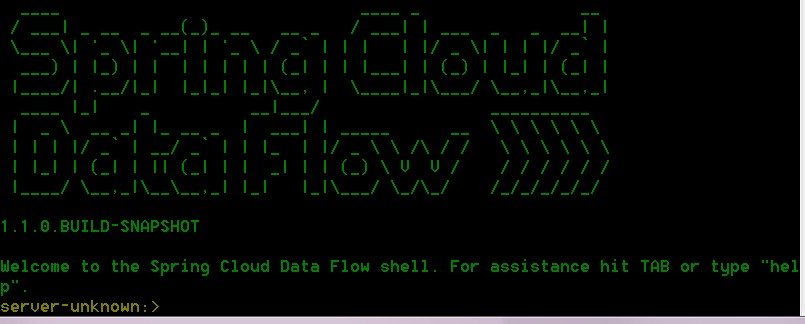

https://repo.spring.io/release/org/springframework/cloud/spring-cloud-dataflow-shell/1.1.0.RELEASE/spring-cloud-dataflow-shell-1.1.0.RELEASE.jar

We can launch the Spring Cloud Data Flow shell using `java -jar spring-cloud-dataflow-shell-1.1.0.RELEASE.jar`

You will then see the shell start up, as shown in the image.

If the Data Flow server is up and running on port 9393, the shell connects to it automatically; otherwise, we can connect to the Data Flow server explicitly using the command below:

Spring Cloud Data Flow provides a server to which microservices can be deployed easily and dynamically, and we can integrate those microservices by piping them together through a middleware message broker. We can monitor the metrics, health and memory of each deployed microservice. Data Flow also provides a shell for managing all of this from the command prompt.

Java web development companies have shared the concept of Spring Cloud Data Flow in this post. You can follow the guidelines shared here to understand Spring Cloud Data Flow and its features. For further queries, write to the experts in the comments.
